Introduction

GoDaddy Auctions is part of the domain aftermarket. Investors typically use the auction service to find expiring domains, or domains listed by other users, to grow their investment portfolios. There is a learning curve in knowing what to look for and how to find value. We at GoDaddy Auctions focus on solving customer problems with AI, and we have launched an AI agent on the GoDaddy Auctions page (AI Domain Assistant) to help with some of the most common ones.

One of our goals is to enable customer success with inventory discovery, idea generation, watch list management, and the purchase journey. We have trained the agent to help not just seasoned investors, but also those just getting into investing. We want to make it easy no matter your experience level. The agent exposes some of our search APIs in ways that are quicker to develop than a full-blown UI while also giving users more granular controls, which is great for experimenting. For example, you can query our Majestic data integration via the agent, even though we have not yet prioritized it in the UI.

Natural conversation flow

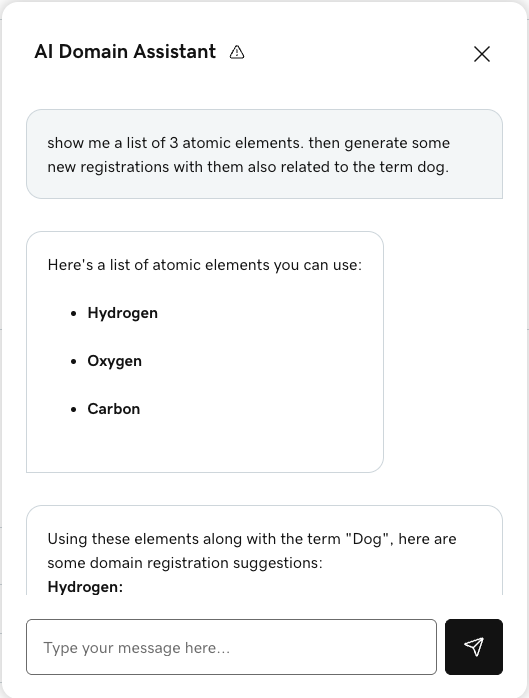

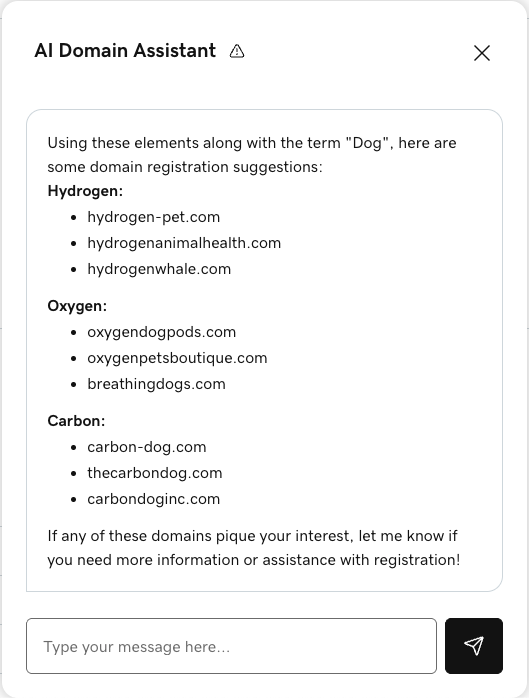

One of the agent concepts that originally opened our minds was chat as a tool. This gives our agent the liberty to decide what content to give the user and when. While simple, this notion allows the conversation to feel much more natural. Rather than a back-and-forth, ping-pong flow, the agent is able to send the user multiple messages helping it be more friendly and informative in a step-by-step manner. The following is an excerpt highlighting the agent's level of communication and the generative discovery capabilities:

If you think about a human-to-human conversation, the back and forth is not strictly sequential. In the excerpt, the agent first replies about the elements that it generated. It could have asked for confirmation, but it decided (from conversational cues) to go straight to results. This gives the user something to think about and collaborate with. The screenshots carry no notion of time, but there are natural pauses between messages to break up the amount of data for the user.

Tool definition

We accommodate back-and-forth communication in our agent and system design. First, we teach the agent through tool definitions how to send a message versus how to ask a question and wait for a response. Depending on how you structure your project, you can achieve this as one tool with actions or as more than one tool. Our team has discussed how to enhance this approach to grant the agent more flexibility in future iterations, for example by letting the agent decide that a dropdown box and a set of checkboxes would best collect user input.

The following is a simple tool definition that allows an agent to handle communication flow:

    name: Chat
    id: chat
    description: |-
      This tool should be used for communication with the user. For the `action`:
      * `input`: is used to request input from the user.
      * `output`: used to send a message to the user.
    parameters:
      properties:
        action:
          description: Action is the chat action to take.
          enum:
            - input
            - output
          type: string
        body:
          oneOf:
            - "$ref": "#/$defs/OutputArguments"
            - "$ref": "#/$defs/InputArguments"
      required:
        - action
        - body
      type: object
      "$defs":
        OutputArguments:
          description: |-
            Sends a message to the user. Use this tool to convey information back to the user
            or to prompt them for further action.
          properties:
            message:
              type: string
          required:
            - message
          type: object
        InputArguments:
          description: |-
            Requests input from the user and pauses agent execution. Use this tool after you
            have asked the user a question and need a response before proceeding.
          properties: { }
          type: object

The tool uses two actions: an output action and an input action. The output action sends messages to the user, either to convey information or to prompt user action. The input action requests input from the user and pauses agent execution until the user responds.
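To make the two actions concrete, here is a minimal Python sketch of how a host application might dispatch a call to the chat tool. It is an illustration under stated assumptions, not our implementation: `ChatResult` and `handle_chat_call` are hypothetical names, and the only things taken from the definition above are the `action` values and the `message` field.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class ChatResult:
    """Accumulated effect of chat tool calls (hypothetical shape)."""
    sent: list[str] = field(default_factory=list)  # messages delivered to the user
    awaiting_user: bool = False                    # True once the agent asks for input


def handle_chat_call(arguments: dict[str, Any], result: ChatResult) -> ChatResult:
    """Apply one chat tool call to the result, rejecting malformed arguments."""
    action = arguments.get("action")
    body = arguments.get("body")
    if action not in ("input", "output") or not isinstance(body, dict):
        raise ValueError("chat call needs an action of input/output and a body object")

    if action == "output":
        message = body.get("message")
        if not isinstance(message, str):
            raise ValueError("output action requires a string message")
        result.sent.append(message)  # deliver the message; the agent keeps running
    else:
        result.awaiting_user = True  # pause execution until the user replies
    return result


state = ChatResult()
handle_chat_call({"action": "output", "body": {"message": "Here are five ideas."}}, state)
handle_chat_call({"action": "input", "body": {}}, state)
```

Because `output` does not pause anything, the agent can issue several of these calls in a row before a single `input` call hands the turn back to the user.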

System design

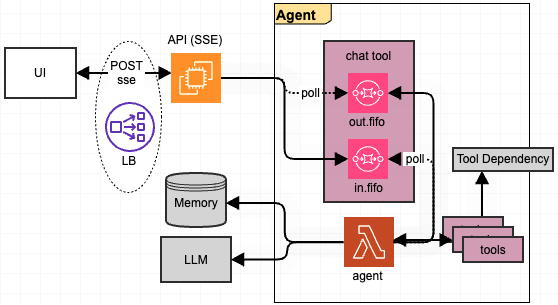

After deciding how to reply, the agent has to handle the streaming response for one request. We implemented Server Sent Events (SSE) to cover this pattern. SSE offers the most stability with a good blend of system complexity and user experience. We isolate this complexity between our API and the user interface. We do not need to deal with streaming to the agent or from the LLM because this approach represents those events as multiple tool calls. We have experimented with SSE, WebSockets and HTTP POST requests, and being able to switch any of these in and out proves our design to be flexible and robust. The following image shows the basic system architecture:
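As a rough illustration of the API-to-UI leg, the sketch below turns a sequence of chat tool calls into SSE frames. The frame layout (`event:` and `data:` fields followed by a blank line) is the standard SSE wire format; the event names `message`, `awaiting_input`, and `done`, the payload shape, and both function names are assumptions made for this example.

```python
import json
from typing import Iterable, Iterator


def format_sse(event: str, payload: dict) -> str:
    """Serialize one Server-Sent Event frame."""
    # json.dumps escapes newlines, so the payload always fits on one data line.
    return f"event: {event}\ndata: {json.dumps(payload)}\n\n"


def stream_chat_calls(tool_calls: Iterable[dict]) -> Iterator[str]:
    """Yield one SSE frame per chat tool call (hypothetical event names)."""
    for call in tool_calls:
        if call["action"] == "output":
            yield format_sse("message", {"text": call["body"]["message"]})
        elif call["action"] == "input":
            # The agent is now waiting on the user, so the stream ends here.
            yield format_sse("awaiting_input", {})
            return
    # The agent finished without asking for input.
    yield format_sse("done", {})


frames = list(stream_chat_calls([
    {"action": "output", "body": {"message": "Hi"}},
    {"action": "input", "body": {}},
]))
```

Keeping the framing in one small function is what makes the transport easy to swap: a WebSocket or plain HTTP variant would only need a different serializer.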

Our agent operates as an independent entity, while an API fronts the agent and manages the SSE interface and its connections. As a result, the agent itself is stateless. We interface it with an LLM provider and a GoDaddy memory service. For a given conversation, we receive the next message, look up the whole conversation, send a selected subset of the conversation to the LLM, and finish by executing tools. Chat is also a tool call, so this acts as a loop that keeps running until it pauses to wait for user input. We started this project before MCP was widespread, and as a result our tools interface with APIs directly. Converting to MCP where it makes sense is in our backlog. Similarly, we can easily plug in an agent-to-agent API interface for future extensibility.
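The loop described above can be sketched in a few lines of Python. Everything here is a stand-in: `MemoryStore` is an in-memory substitute for the memory service, `select_context` simply keeps the most recent messages, the `llm` callable returns one tool call as a dictionary, and `max_steps` is a safety cap added for the example. None of these names or shapes come from our codebase.

```python
from typing import Callable

Message = dict  # hypothetical shape: {"role": ..., "content": ...}


class MemoryStore:
    """In-memory stand-in for a conversation memory service."""

    def __init__(self) -> None:
        self._conversations: dict[str, list[Message]] = {}

    def load(self, conversation_id: str) -> list[Message]:
        return list(self._conversations.get(conversation_id, []))

    def append(self, conversation_id: str, message: Message) -> None:
        self._conversations.setdefault(conversation_id, []).append(message)


def select_context(history: list[Message], limit: int = 20) -> list[Message]:
    """Choose the subset sent to the LLM; here, the most recent messages."""
    return history[-limit:]


def handle_turn(conversation_id: str, user_text: str, memory: MemoryStore,
                llm: Callable[[list[Message]], dict], max_steps: int = 10) -> list[str]:
    """Process one user message and return the messages to show the user."""
    memory.append(conversation_id, {"role": "user", "content": user_text})
    outbox: list[str] = []
    for _ in range(max_steps):
        call = llm(select_context(memory.load(conversation_id)))
        memory.append(conversation_id, {"role": "assistant", "content": call})
        if call["tool"] == "chat":
            if call["action"] == "input":
                break  # pause; the next request resumes from stored memory
            outbox.append(call["body"]["message"])
        # Other tools (search, watch list, and so on) would be executed here.
    return outbox


def scripted_llm(script: list[dict]) -> Callable[[list[Message]], dict]:
    """Fake LLM that replays a fixed list of tool calls, for demonstration."""
    calls = iter(script)
    return lambda context: next(calls)
```

Since all state lives in the memory store, any instance of the agent can pick up the conversation when the user's next message arrives.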

Prompt security

Agent hijacking is a strong concern for us. A hijacker could inject rogue messages into a conversation, maintaining the facade of an agent that works correctly while skimming data or performing other nefarious actions. We don't want our agent used as a tool to attack other systems or people. Our prompt security and safety measures help mitigate such attempts, and our prompt safety monitoring gives us insights to learn from and improve our mitigation strategies.

We spent a good deal of time talking to our agent, trying to get it to take inappropriate actions. These could range from providing information unrelated to domain investing to, worse, exposing parts of GoDaddy's inner workings. LLMs are just as susceptible to social engineering as we are. Pressure tactics, such as a sense of urgency, can trick an LLM just as easily as a human. We found that being explicit about what the agent can do is generally not enough. Instead, we stated what information the agent cannot share, spread over the system prompt and tool definitions.

In our system prompt we defined Security and Privacy sections covering high-level aspects of focus, technical privacy, tool privacy, and conversation redirection. This was enough to guard the agent during a conversation, even when the user started to get pushy. On top of that, we also defined a Security section in our tool definitions to remind the agent what not to expose and, where relevant, how to react. While it is impossible to lock down a free-form chat agent perfectly, attention and diligence go a long way.

Lastly, we have a notion of prompt safety, scoring prompts on topics like explicitness, sexuality, and even personal information. High enough scores lead to flagging so we can then investigate and learn. That data will show us whether our agent holds its own, whether we need to tune our prompts further for better protection, or whether a user attempted something malicious and needs investigation for possible suspension. This is all data that we are collecting and will digest and experiment on.
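The flagging step can be pictured as a simple threshold check over per-topic scores. The sketch below assumes scores arrive from an upstream scorer on a 0.0 to 1.0 scale; the topic keys, threshold values, and the `SafetyFlag` and `flag_prompt` names are all invented for illustration and do not reflect our actual scoring.

```python
from dataclasses import dataclass

# Hypothetical per-topic thresholds on an assumed 0.0-1.0 scale.
THRESHOLDS = {"explicit": 0.8, "sexual": 0.8, "personal_information": 0.6}


@dataclass
class SafetyFlag:
    conversation_id: str
    topic: str
    score: float


def flag_prompt(conversation_id: str, scores: dict[str, float],
                thresholds: dict[str, float] = THRESHOLDS) -> list[SafetyFlag]:
    """Return a flag for every topic whose score meets or exceeds its threshold."""
    return [
        SafetyFlag(conversation_id, topic, score)
        for topic, score in scores.items()
        # Topics without a configured threshold are flagged only at the maximum score.
        if score >= thresholds.get(topic, 1.0)
    ]


flags = flag_prompt("c1", {"explicit": 0.1, "personal_information": 0.7})
```

Each flag keeps the conversation identifier so a reviewer can pull up the full exchange and decide whether the prompt, the agent, or the user needs attention.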

Conclusion

Part of the challenge of agentic offerings is making them feel natural. The more liberty you can give an agent, the more useful it can become. The chat is at the forefront of that experience, and teaching an agent to communicate freely is one way we are achieving this. We'll always uncover oddities and edge cases, but careful prompt tuning keeps those to a minimum. On top of that, attention to prompt security and safety lets us keep a close eye on whether the agent performs unexpected actions. Keeping these concepts in mind in our architecture lets us easily discover, iterate, and experiment.

In the future we plan to add more capabilities to our agent, making it even easier to perform auctions-related activities, including automating some flows.