Key takeaways

- Context overload, staleness, and emotional blindness undermine AI agents. Dumping full histories creates noise, outdated data erodes trust, and missing emotional cues frustrates users.

- Sliding-window summarization keeps agents coherent at low latency by segmenting conversations into 5–10 turn windows and merging summaries incrementally to avoid reprocessing entire histories.

- Negative sentiment triggers action, not just labels. Detecting frustration patterns enables tone adjustments, proactive fixes, or human escalation before experiences degrade.

Picture this: an AI agent confidently tells a customer their domain renewal is "all set" based on week-old cache data, while the customer stares at an expired website. Or imagine an agent that responds to an increasingly frustrated user with the same cheerful tone, completely missing the escalating emotional cues. These aren't hypothetical scenarios — they're the reality of AI systems that lack true contextual awareness.

In multi-agent AI systems, context isn't just data; it's a dynamic, engineered construct that can make or break user experience. Naively populating a prompt with historical data creates context collision, hallucinations, and escalating operational costs. True contextual awareness requires a robust architecture that delivers the right information, to the right agent, at the right time.

At GoDaddy, we are building a lightweight memory service (Agent State Memory) that enables AI agents to collaborate seamlessly. Our journey began with a fundamental question: how do we give our agents not just memory, but wisdom about what matters most in each interaction?

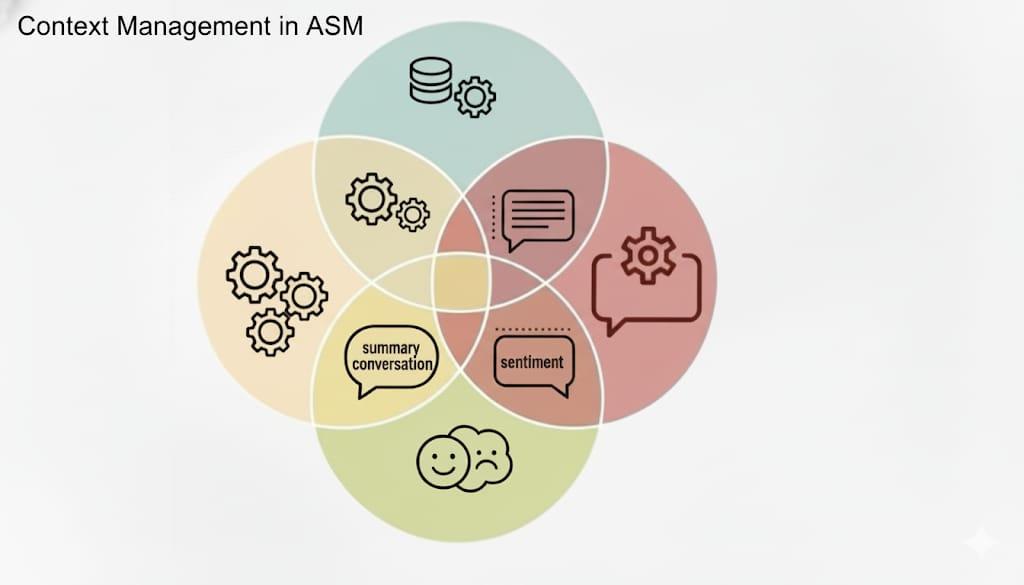

The answer lies in context engineering, and it starts with getting two foundational elements right: understanding what happened (dynamic conversation summaries) and how users feel about it (evolving sentiment analysis). These modules provide our agents with both the factual narrative and the emotional trajectory of every interaction, creating the bedrock for all future agentic capabilities.

The hidden complexity of AI context

Keeping an AI agent coherent in a live conversation is harder than it looks. Our first lesson was context overload: dumping entire histories (past interactions, settings, and telemetry) into every prompt creates noise, drives up cost, and introduces conflicting signals. Early prototypes confidently latched onto details from weeks-old exchanges that no longer mattered.

We then hit context staleness, the quiet source of hallucinations. When an agent acts on outdated facts, like last week’s account state, it can be more harmful than acting with no extra context at all. Trust erodes quickly when the system sounds sure but is wrong.

Finally, there is emotional blindness. Agents excel at parsing the explicit content of user inputs (what is said) but frequently overlook the underlying emotional cues (how it’s said) unless explicitly prompted to do so. As a result, they often ignore escalating frustration, sarcasm, or confusion within a conversation, and their responses are less effective at resolving user issues.

Our answer is not “more data” but better curation. We built Agent State Memory to keep just enough fresh context: rolling conversation summaries that capture the facts, and sentiment trends that capture the mood. Agents read this on each turn, so responses stay focused, current, and sensitive to the user’s state without bloating the prompt.

Shaping Agentic AI with conversation summaries and sentiment awareness

To address these challenges, we started with two foundational layers in our memory service, conversation summaries and sentiment analysis, because they solve immediate, critical problems that every customer-facing AI system encounters.

Conversation summaries provide a concise, running brief of each interaction, creating a shared “source of truth” that lets agents maintain continuity over long conversations and enables seamless hand-offs between specialized agents as our system evolves.

Sentiment analysis acts as our emotional barometer. By tracking sentiment trends throughout a conversation, our system can detect when a user is becoming frustrated, allowing agents to dynamically adjust their tone. Over time, this capability will trigger automatic escalation to human experts before frustration boils over into a negative experience.

Together, summaries and sentiment keep responses focused, current, and empathetic without bloating the prompt. In the sections that follow, we’ll show how these signals flow through the system and stay fresh without slowing agents down.

Negative sentiment as an action signal, not just a label

While sentiment analysis tracks the full emotional trajectory of conversations, negative sentiment detection is likely our highest-impact signal. Consider a typical scenario: a user tries to update their DNS settings three times, each attempt failing with cryptic error messages. By the third try, their language shifts from polite inquiry to sharp frustration.

Our system flags this pattern through multiple signals. It detects repeated failed attempts, monitors for impatient language, and tracks escalating emotional indicators. This enables proactive intervention: adjusting the agent's tone, providing more detailed explanations, or triggering escalation before frustration boils over into a support nightmare.

Beyond individual interactions, negative sentiment becomes powerful business intelligence. When multiple users complain about the same feature, the long-term memory correlates these signals with the internal issue trackers, turning scattered support tickets into actionable product feedback. Most critically, negative sentiment serves as an early warning system for churn, giving our agents opportunities to intervene with targeted solutions.

At a glance, this lets us:

- Detect deteriorating experiences

- Identify systemic gaps

- Quantify business impact by linking frustration patterns to churn, CSAT, and repeat contacts

The following table gives an example of how these signals can be operationalized. Exact policies vary by product and channel, but the pattern is the same: detect → respond → learn.

| Example signal we detect | Example policy response | Analytics captured |
|---|---|---|
| Same request repeats across three turns; short replies like “still not working”; downward sentiment trend | Apologize and restate a clear plan; auto-verify the relevant setting and attach the result | Trigger type, turn IDs, evidence snippet, action taken, resolution outcome |
| Unresolved item persists for two or more windows; faster user reply times (impatience proxy) | Offer a fast path; propose human hand-off if verification fails | Time-to-resolution impact, hand-off effectiveness, loop root cause |
| Multiple conversations show the same pattern around one feature | Flag to product/ops; correlate with incident/bug tracker | Issue frequency by feature, deflection/churn correlation |

Operationalizing negative signals this way pays off in two areas:

- Analytics: every trigger is logged with evidence and outcome, enabling PMs to spot hot spots, tune thresholds, and prioritize fixes.

- Augmented actions: policies change the response, not just the score — de-escalating tone, running targeted checks, or routing to a human before the experience degrades.
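To make the detect → respond → learn pattern concrete, here is a minimal Python sketch of one rule: flagging a request that has been repeated across several turns. Everything named here is an assumption for illustration, not our production code: the `Trigger` record, the `detect_repeated_request` helper, the `0.8` similarity threshold, and the `apologize_and_auto_verify` action label. Fuzzy string matching from the standard library stands in for whatever detector a real deployment would use.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher
from typing import Optional


@dataclass
class Trigger:
    """Analytics record for one detection (hypothetical field names)."""
    trigger_type: str
    turn_ids: list[int]
    evidence: str
    action: str


def is_repeat(a: str, b: str, threshold: float = 0.8) -> bool:
    # Case-insensitive fuzzy match; the threshold is an assumed tuning knob.
    return SequenceMatcher(None, a.lower(), b.lower()).ratio() >= threshold


def detect_repeated_request(
    user_turns: list[tuple[int, str]], min_repeats: int = 3
) -> Optional[Trigger]:
    """Return a Trigger if the latest user message repeats earlier ones.

    user_turns is a list of (turn_id, text) pairs in conversation order.
    """
    if not user_turns:
        return None
    _, latest_text = user_turns[-1]
    # The latest turn always matches itself, so it counts toward min_repeats.
    matches = [tid for tid, text in user_turns if is_repeat(text, latest_text)]
    if len(matches) < min_repeats:
        return None
    return Trigger(
        trigger_type="repeated_request",
        turn_ids=matches,
        evidence=latest_text,
        action="apologize_and_auto_verify",  # assumed policy label
    )
```

The returned record carries the evidence and the chosen action together, so the same object can drive the agent's response and be logged for later analysis.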

Personalization grounded in sentiment

Our goal isn’t “more memory”; it’s better judgment. Sentiment tells us how a user is showing up (optimistic, cautious, frustrated) and becomes a control signal that adapts the next move.

In the short term, a rolling summary plus a sentiment trend sit in short-term memory and steer behavior: keep momentum when things are positive; if the trend dips, shift tone, auto-verify likely failure points, and offer a faster path or human hand-off without bloating the prompt.

Over the long term, those same signals become lightweight semantic memory — preference and risk markers we can reuse without storing the full episode. Repeated dips around the same feature can predict friction and potential churn; consistent positive swings after certain steps show what actually delights. That lets us anticipate needs (“you’ve had trouble with auto-renew in the past; I’ve double-checked those settings”) and avoid the patterns that frustrate.

Put simply: sentiment guides personalization in the moment (short-term behavior) and teaches us how to personalize better over time (stable hints like preferences, risk flags). It lowers the chance we push frustrated users toward churn, and it highlights when a conversation is going well so we can replicate what made it work.

A fast and event-driven system that stays in sync

To keep the agent responsive while continuously learning from every interaction, we treat context enrichment as a background job: every five user–agent turns are batched into a window and sent to the context management system for summarization and sentiment updates. The context management system fetches the previous enrichment (summary + sentiment) from the memory service, combines it with the new five-turn window, and generates an updated summary and sentiment bundle. The result is written back as the latest enrichment.

Meanwhile, the agent always responds from the latest committed snapshot of memory. By the time it takes its next turn, it can retrieve this refreshed, enriched context and respond with deeper awareness. This design keeps conversations flowing naturally while the system quietly stays in sync.
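The flow described above can be sketched as follows. This is a simplified, synchronous Python illustration under stated assumptions: `MemoryService`, `ContextManager`, `Enrichment`, and `enrich` are hypothetical names, the memory service is an in-memory stand-in, and `enrich` is a placeholder for the LLM call. In a real system the enrichment step would run as a background job rather than inline.

```python
from dataclasses import dataclass, field

WINDOW_SIZE = 5  # turns per enrichment batch, as described above


@dataclass
class Enrichment:
    summary: str = ""
    sentiment: str = "neutral"
    version: int = 0


@dataclass
class MemoryService:
    """In-memory stand-in for the memory service (hypothetical interface)."""
    latest: Enrichment = field(default_factory=Enrichment)

    def read_snapshot(self) -> Enrichment:
        # Agents always read the latest committed enrichment.
        return self.latest

    def commit(self, enrichment: Enrichment) -> None:
        self.latest = enrichment


def enrich(previous: Enrichment, window: list[str]) -> Enrichment:
    """Placeholder for the LLM step that merges prior state with a new window."""
    prefix = previous.summary + " | " if previous.summary else ""
    return Enrichment(
        summary=prefix + f"{len(window)} turns summarized",
        sentiment=previous.sentiment,
        version=previous.version + 1,
    )


class ContextManager:
    def __init__(self, memory: MemoryService) -> None:
        self.memory = memory
        self.pending: list[str] = []

    def on_turn(self, turn: str) -> None:
        """Buffer a turn; once a full window accumulates, enrich and commit."""
        self.pending.append(turn)
        if len(self.pending) >= WINDOW_SIZE:
            window = self.pending[:WINDOW_SIZE]
            self.pending = self.pending[WINDOW_SIZE:]
            previous = self.memory.read_snapshot()
            self.memory.commit(enrich(previous, window))
```

Because the agent only ever calls `read_snapshot`, it never waits on enrichment: it sees the previous version until the next one is committed.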

Context intelligence modules

Our agent maintains coherence through a set of context intelligence modules that all share the same backbone: a sliding-window view of the conversation. Instead of feeding the full history to the model, we work over overlapping windows of 5–10 turns, extract the key facts and decisions from each window, and write them back as structured state. The following sections show how we apply this pattern in two modules: progressive conversation summaries and evolving sentiment analysis.

Progressive conversation summaries

Summarizing entire conversation histories sounds straightforward until you hit reality. It's computationally expensive and prone to factual drift. Our solution emerged from a key insight that conversations naturally segment into focused topics, and we can leverage this structure.

Consider a 20-turn conversation about SSL certificate issues. Instead of processing all turns at once, we segment the dialogue into fixed-size windows (typically 5–10 turns). The first window might cover initial problem description and basic troubleshooting. The second window could focus on advanced diagnostics. Each window gets summarized independently by an LLM-powered tool call, capturing the essential information without overwhelming the system.

The real innovation happens in incremental merging. A separate process takes each new window summary and merges it with the previous rolling summary, creating a "summary of summaries" that evolves without reprocessing the entire dialogue. This approach maintains concise, evolving state while ensuring low latency, which is crucial for real-time interactions.

Every summary uses structured output through tool calls with strict JSON schemas, capturing intents, entities, and unresolved issues. We validate quality by generating questions from the source window and verifying that our summaries contain correct answers. It is a simple but effective quality gate.
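A minimal Python sketch of the windowing and incremental merge might look like this. It is an illustration under assumptions, not our implementation: `WindowSummary`, `merge`, and `rolling_summary` are hypothetical names, the `resolved` field is added here so the merge can retire issues, windows are non-overlapping for simplicity, and the `summarize` callable stands in for the LLM tool call.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class WindowSummary:
    intents: list[str] = field(default_factory=list)
    entities: list[str] = field(default_factory=list)
    unresolved: list[str] = field(default_factory=list)
    resolved: list[str] = field(default_factory=list)  # assumed extra field


def split_into_windows(turns: list[str], size: int = 5) -> list[list[str]]:
    """Cut the conversation into consecutive fixed-size windows."""
    return [turns[i:i + size] for i in range(0, len(turns), size)]


def _union(a: list[str], b: list[str]) -> list[str]:
    # Order-preserving de-duplication.
    return list(dict.fromkeys(a + b))


def merge(rolling: WindowSummary, new: WindowSummary) -> WindowSummary:
    """Fold one window summary into the rolling summary of summaries."""
    unresolved = [
        item for item in _union(rolling.unresolved, new.unresolved)
        if item not in new.resolved  # newly resolved issues are retired
    ]
    resolved = [
        item for item in _union(rolling.resolved, new.resolved)
        if item not in new.unresolved  # a re-opened issue is no longer resolved
    ]
    return WindowSummary(
        intents=_union(rolling.intents, new.intents),
        entities=_union(rolling.entities, new.entities),
        unresolved=unresolved,
        resolved=resolved,
    )


def rolling_summary(
    turns: list[str],
    summarize: Callable[[list[str]], WindowSummary],
    size: int = 5,
) -> WindowSummary:
    """Summarize each window once and merge incrementally."""
    rolling = WindowSummary()
    for window in split_into_windows(turns, size):
        rolling = merge(rolling, summarize(window))
    return rolling
```

Each turn is summarized exactly once, so the cost of an update depends on the window size rather than the length of the conversation.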

Sentiment as evolving context intelligence

Traditional sentiment analysis provides only snapshots (happy, neutral, frustrated). But real conversations are emotional journeys. Frustration builds, resolves, or escalates based on how effectively issues get addressed. Understanding this progression became critical for effective agent routing and intervention. We apply the same sliding-window approach we use for summaries. As the conversation unfolds, we run sentiment analysis over fixed-size windows (typically 5–10 turns) and roll those scores into an evolving view of the session, tracking both the current emotional state and cumulative trends.

- Rolling sentiment calculation: We maintain both per-window and overall sentiment, using a weighted decay so that fresh emotional signals count more than what was said earlier in the conversation.

- Frustration point collection: The system goes beyond simple sentiment scoring to identify specific frustration patterns. Embedded detectors flag repeated queries (like users asking the same question three times), escalating language, or unresolved loops where agents suggest identical solutions multiple times. Each detection includes forensic evidence: direct quotes, timestamps, and window attribution, which powers better hand-offs.

- Source of frustration: When frustration emerges, we trace back to root causes. Is it a product bug? Policy mismatch? Agent miscommunication? For example, if the user mentions "Your app crashed again," the source might be linked to a known software glitch, pulled from our integrated issue tracker.

- Trend analysis for proactive intervention: By comparing rolling sentiment across windows, the system classifies trends as improving, stable, or deteriorating. This enables proactive actions like escalating the case, switching channels, or adapting the agent’s behavior before the user churns.

- Structured output: All of this is surfaced through LLM tool calls with strict schema validation, including sentiment labels, confidence scores, dominant emotions, and actionable evidence. The result is a structured, auditable signal that downstream systems can trust.
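The rolling calculation and trend classification above can be sketched in Python as follows. The specifics are assumptions for illustration: per-window scores are taken to lie in [-1, 1], the weighting is an exponential decay with factor `0.5`, and a trend is called when the rolling score moves by more than a `0.1` tolerance. The function names are hypothetical.

```python
def rolling_sentiment(window_scores: list[float], decay: float = 0.5) -> float:
    """Decay-weighted average where the newest window has weight 1.

    Each step back in time multiplies the weight by `decay`, so recent
    windows count more than older ones.
    """
    if not window_scores:
        return 0.0
    n = len(window_scores)
    weights = [decay ** (n - 1 - i) for i in range(n)]
    weighted = sum(w * s for w, s in zip(weights, window_scores))
    return weighted / sum(weights)


def classify_trend(
    window_scores: list[float], decay: float = 0.5, tolerance: float = 0.1
) -> str:
    """Compare the rolling score with and without the newest window."""
    if len(window_scores) < 2:
        return "stable"
    current = rolling_sentiment(window_scores, decay)
    previous = rolling_sentiment(window_scores[:-1], decay)
    delta = current - previous
    if delta > tolerance:
        return "improving"
    if delta < -tolerance:
        return "deteriorating"
    return "stable"
```

For example, window scores of `[0.4, 0.0, -0.6]` move the rolling score from about 0.13 to about -0.29, which this sketch classifies as `deteriorating`.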

Building resilient context systems

Building context systems isn’t just about adding intelligence; it’s about protecting truth. Along the way, we’ve learned that good context isn’t just accurate; it’s resilient. Three challenges stood out: poisoning, distraction, and confusion.

- Poisoning (small errors that spread): This happens when a small hallucination slips into a summary and spreads. We prevent this through schema validation and evidence linking — every claim must trace back to a verifiable quote and timestamp.

- Distraction (too much irrelevant detail): Models fail when they drown in noise. Our sliding-window design keeps scope tight, while prompts guide the LLM to focus on high-signal moments — unresolved issues, emotional spikes, and core intents.

- Confusion (conflicting sources): Context breaks down when sources disagree. We handle this with a clear hierarchy — recent context overrides older summaries — and run consistency checks before any enrichment reaches the agent.

These lessons taught us that reliable context isn’t just built — it’s curated, one safeguard at a time.
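Two of these safeguards, evidence linking and the recency hierarchy, are simple enough to sketch in Python. This is an illustration under assumptions rather than our implementation: the `Claim` record and both helper names are hypothetical, evidence is checked by verbatim substring match, and turn IDs stand in for timestamps.

```python
from dataclasses import dataclass


@dataclass
class Claim:
    text: str     # the statement made in the summary
    quote: str    # the supporting quote cited as evidence
    turn_id: int  # the turn the quote is attributed to


def validate_claims(claims: list[Claim], turns: dict[int, str]) -> list[Claim]:
    """Keep only claims whose quote appears verbatim in the cited turn."""
    return [
        claim for claim in claims
        if claim.quote and claim.quote in turns.get(claim.turn_id, "")
    ]


def resolve_conflicts(facts: list[tuple[str, str, int]]) -> dict[str, str]:
    """Resolve (key, value, turn_id) facts so the most recent turn wins."""
    latest: dict[str, tuple[str, int]] = {}
    for key, value, turn_id in facts:
        if key not in latest or turn_id >= latest[key][1]:
            latest[key] = (value, turn_id)
    return {key: value for key, (value, _) in latest.items()}
```

A claim with a missing quote, a quote that does not appear in the cited turn, or a turn ID that does not exist is dropped before it can reach the rolling summary.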

The journey continues: from rigorous evaluation to persistent intelligence

This exploration of foundational context engineering is just the beginning of our memory service and context management layer. We have established reliable sentiment analysis and conversation summarization that meaningfully improve how our agents understand and respond to user interactions.

In our next post, we will focus on evaluation — how we measure and ensure the quality of contextual intelligence using metrics like faithful recall. We’ll share how our research-backed framework tracks faithfulness, recall, and real-world impact to keep our systems both accurate and trustworthy.

From there, the real transformation begins. We will explore the next architectural evolution: engineering modules for persistent, long-term memory that move agents from session-based awareness to persistent user context. We will detail how we leverage retrieval-augmented generation to securely surface relevant user history, enabling powerful personalization within a single session and across a customer's entire journey with GoDaddy. Stay tuned as we continue to build a truly intelligent context engine for our multi-agent ecosystem.