Key takeaways

- GoDaddy processes 100,000+ daily customer service transcripts using AI to identify pain points and operational issues at company-wide scale

- Large language models can extract consistent insights from messy, conversational data at massive scale

- Automated analysis helps catch emerging issues in hours instead of weeks, reducing customer impact and operational costs

Background

GoDaddy serves millions of customers worldwide, and delivering exceptional customer service is at the heart of everything we do. When a customer contacts us, our dedicated Care Guides do everything they can to assist. The recorded interactions are converted into transcripts, a rich source of insight into customer pain points, agent effectiveness, and opportunities for improvement or growth. We recognized the potential to mine this data for key call drivers and pain points.

These interactions are unstructured, high-volume, and span multiple languages. Traditionally, supervisors, managers, and analysts reviewed the transcripts manually. Evaluating individual interactions can provide meaningful insights into specific cases, but the method quickly becomes limiting: it is difficult to extract broad, company-wide intelligence from a handful of reviewed calls.

The key challenges we faced included:

- Massive scale: up to 100,000 English-language transcripts daily

- Unstructured conversations containing colloquial language and cross-topic shifts

- Latency requirements: actionable insights within hours rather than weeks

- Operational constraints requiring compliance with security and privacy policies

Lighthouse: GoDaddy's Internal AI Analytics Platform

Manual review and sampling approaches failed to provide comprehensive intelligence, and even targeted audits could not surface systemic issues or detect emerging problems quickly enough to influence daily operations. To address these challenges, we developed Lighthouse, a purpose-built internal analytics platform for large-scale analysis of unstructured customer interaction data using AI, large language models (LLMs), and lexical search. This innovation empowers teams across the organization to proactively identify pain points, accelerate issue resolution, and continuously improve products and services based on real-world customer feedback.

Core components

Building Lighthouse required solving three key challenges: extracting consistent insights from unstructured conversations, managing multiple AI models effectively, and processing massive data volumes at scale.

Prompt engineering framework

Early attempts with ad hoc prompts produced inconsistent results across similar conversations. To solve this, we built a versioned prompt library that enables systematic iteration with subject matter expert input.

Each prompt outputs structured JSON data for reliable downstream analytics. The versioning system ensures reproducibility and enables teams to track which prompt generated specific insights, making debugging and improvement much more manageable.
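A versioned prompt library of this kind could be sketched as follows. This is a minimal illustration, not Lighthouse's actual code: the `PromptVersion`, `PromptLibrary`, and `tag_insight` names, the in-memory storage, and the example prompt text are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptVersion:
    name: str        # hypothetical prompt identifier
    version: int
    template: str    # prompt text with a {transcript} placeholder


class PromptLibrary:
    """In-memory registry keyed by (name, version)."""

    def __init__(self) -> None:
        self._prompts = {}

    def register(self, prompt: PromptVersion) -> None:
        key = (prompt.name, prompt.version)
        if key in self._prompts:
            # Published versions are immutable; a change needs a new version.
            raise ValueError(f"{prompt.name} v{prompt.version} already exists")
        self._prompts[key] = prompt

    def latest(self, name: str) -> PromptVersion:
        versions = [p for (n, _), p in self._prompts.items() if n == name]
        if not versions:
            raise KeyError(name)
        return max(versions, key=lambda p: p.version)


def tag_insight(prompt: PromptVersion, insight: dict) -> dict:
    """Attach provenance so each insight traces back to its prompt."""
    return {**insight, "prompt_name": prompt.name, "prompt_version": prompt.version}


library = PromptLibrary()
library.register(PromptVersion("call_driver", 1, "Summarize: {transcript}"))
library.register(
    PromptVersion("call_driver", 2, "Return JSON with key 'driver' for: {transcript}")
)

current = library.latest("call_driver")
record = tag_insight(current, {"driver": "billing"})
```

Storing the prompt name and version alongside every insight is what makes it possible to trace a surprising result back to the exact prompt that produced it.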

Model orchestration

No single LLM excels at every task - some models perform better at sentiment analysis, while others excel at product issue extraction. Models also differ in practical constraints such as token limits and concurrency limits. For example, we chose Claude v2 for our batch processing operations due to its favorable token limits and cost structure for high-volume analysis. Our pluggable system allows teams to select the optimal model for their specific use case.

A comprehensive evaluation framework continuously scores model responses against accuracy, relevance, and consistency metrics. This helps teams make data-driven model choices and detect performance degradation over time.
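As a rough sketch of how evaluation scores can drive model choice per task: the model names, the score values, and the unweighted average below are illustrative assumptions, not GoDaddy's actual metrics or selection logic.

```python
from statistics import mean

# Hypothetical evaluation scores per (task, model), each in [0, 1].
# Metric names follow the text; the numbers are made up for illustration.
SCORES = {
    ("sentiment", "model_a"): {"accuracy": 0.91, "relevance": 0.88, "consistency": 0.90},
    ("sentiment", "model_b"): {"accuracy": 0.85, "relevance": 0.90, "consistency": 0.80},
    ("issue_extraction", "model_a"): {"accuracy": 0.78, "relevance": 0.80, "consistency": 0.82},
    ("issue_extraction", "model_b"): {"accuracy": 0.89, "relevance": 0.87, "consistency": 0.88},
}


def overall(metrics: dict) -> float:
    """Unweighted mean of the metrics; a real system might weight them."""
    return mean(metrics.values())


def best_model(task: str) -> str:
    """Pick the highest-scoring evaluated model for a task."""
    candidates = {m: overall(s) for (t, m), s in SCORES.items() if t == task}
    if not candidates:
        raise KeyError(f"no evaluated models for task {task!r}")
    return max(candidates, key=candidates.get)


print(best_model("sentiment"))
print(best_model("issue_extraction"))
```

With these made-up scores, different tasks resolve to different models, which is the situation a pluggable design is meant to handle.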

Currently, our system processes English-language transcripts. We plan to expand to other languages as models improve their multilingual capabilities, while continuing to provide insights in English for consistent reporting across the organization.

Scalable processing pipeline

Processing 100,000+ daily transcripts while maintaining low latency required an event-driven, serverless architecture. AWS Lambda provided the elasticity needed for variable workloads, while S3 separated raw and processed datasets with versioning for data integrity. With this design, our pipeline processes the full daily volume of 100,000+ transcripts in approximately 80 minutes.
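To put those figures in perspective, a quick back-of-the-envelope calculation shows the sustained throughput they imply. The per-call latency below is purely an assumption for illustration; the concurrency estimate follows from Little's law (concurrency = arrival rate × time in system).

```python
import math

DAILY_TRANSCRIPTS = 100_000   # lower bound from the text ("100,000+")
PIPELINE_MINUTES = 80         # approximate run time from the text

per_minute = DAILY_TRANSCRIPTS / PIPELINE_MINUTES
per_second = per_minute / 60

# Assumption, not from the text: average seconds per LLM call.
ASSUMED_SECONDS_PER_CALL = 5.0
required_concurrency = math.ceil(per_second * ASSUMED_SECONDS_PER_CALL)

print(f"{per_minute:.0f} transcripts/minute")    # 1250
print(f"{per_second:.1f} transcripts/second")    # 20.8
print(f"~{required_concurrency} concurrent calls at {ASSUMED_SECONDS_PER_CALL}s each")  # ~105
```

Even under a modest latency assumption, the pipeline needs on the order of a hundred calls in flight at once, which helps explain why concurrency limits matter when choosing a model.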

GoCaaS (our internal LLM wrapper) centralizes LLM API management, OpenSearch enables fast filtering before expensive LLM processing, and Amazon QuickSight provides business users direct dashboard access to insights.

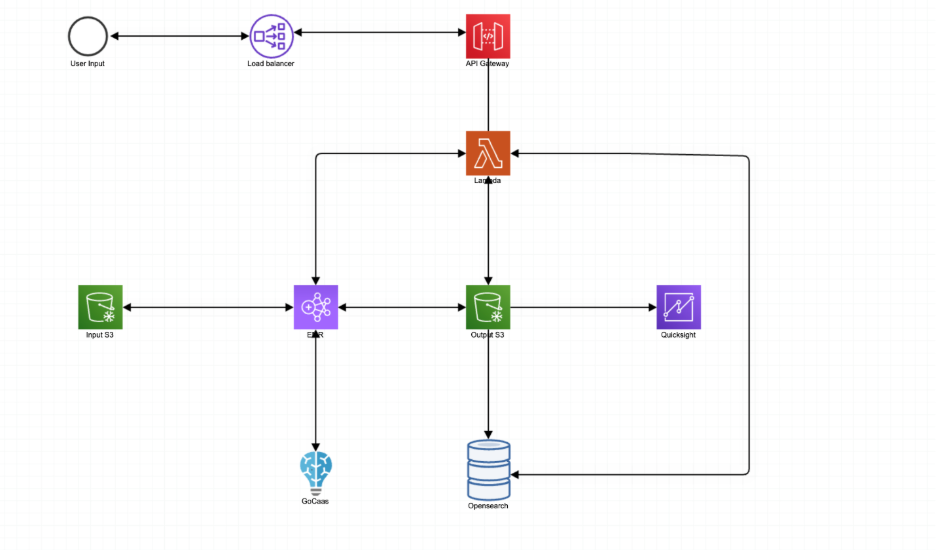

Architecture

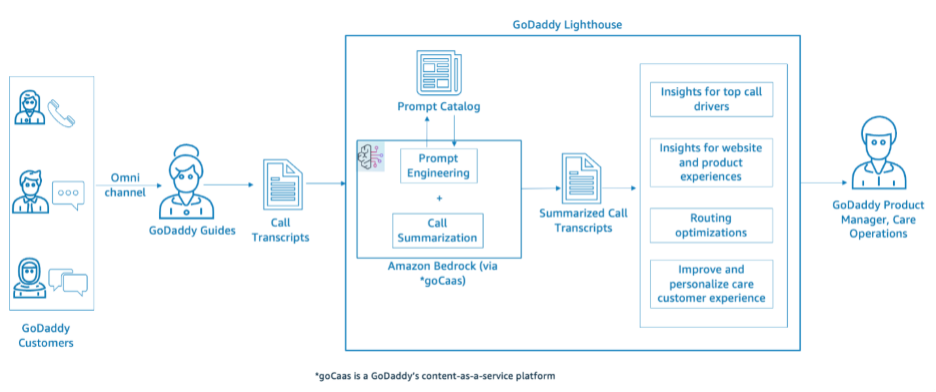

The following diagram outlines the Lighthouse architecture.

Lighthouse follows an event-driven, serverless architecture designed for scalability and reliability. User requests flow through a load balancer and API Gateway that handles routing, authentication, and throttling before reaching AWS Lambda functions that orchestrate the core processing logic.

Data flows through several key components: S3 manages storage with versioning and compliance support, GoCaaS centralizes model access across different AI providers, OpenSearch enables fast lexical filtering before expensive LLM processing, and QuickSight provides business users direct access to insights through familiar dashboard interfaces.

This design automatically scales based on demand while maintaining data integrity and enabling both real-time analysis and historical reprocessing as our methods evolve.

Key technical capabilities

Lighthouse combines several key capabilities to deliver reliable insights at scale. Our lexical search engine provides deterministic token-level matching with proximity controls, delivering sub-second response times over large datasets and enabling targeted filtering before expensive LLM processing.
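Token-level matching with a proximity control can be illustrated in a few lines of plain Python. This is a conceptual sketch only: it does not use the OpenSearch API, and the `tokenize` and `within_proximity` helpers and the sample transcripts are hypothetical.

```python
import re


def tokenize(text: str) -> list:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def within_proximity(text: str, term_a: str, term_b: str, max_gap: int) -> bool:
    """True if term_a and term_b occur at most max_gap token positions apart."""
    tokens = tokenize(text)
    pos_a = [i for i, t in enumerate(tokens) if t == term_a]
    pos_b = [i for i, t in enumerate(tokens) if t == term_b]
    return any(abs(a - b) <= max_gap for a in pos_a for b in pos_b)


transcripts = [
    "The renewal link in my email is broken",
    "I clicked the link and after a long wait the page said my domain was broken",
    "How do I renew my domain?",
]

# Keep only transcripts where "link" and "broken" appear close together.
hits = [t for t in transcripts if within_proximity(t, "link", "broken", max_gap=5)]
```

Because the match is deterministic, the same query always returns the same transcripts, so a cheap filter like this can narrow the candidate set before any LLM call is made.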

For systematic analysis, we run pre-generated insight pipelines that perform scheduled ETL operations for category-based aggregation across topics, intents, and sentiment. Each prompt declares an output JSON schema with runtime validators that reject malformed completions and auto-retry with corrective hints, guaranteeing well-formed data for downstream tools. The system includes per-prompt concurrency controls, token budgets, and circuit breakers to protect upstream systems while delivering pre-computed distributions and anomaly detection.
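The validate-and-retry loop described above might look roughly like this. The schema, the hint wording, and the `complete_with_retry` helper are assumptions for illustration, and a stub stands in for the real model call.

```python
import json
from typing import Callable, Optional, Tuple

# Hypothetical schema: required field name -> expected Python type.
SCHEMA = {"topic": str, "intent": str, "sentiment": str}


def validate(raw: str) -> Tuple[Optional[dict], str]:
    """Return (parsed, "") on success or (None, error message) on failure."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return None, f"Output was not valid JSON: {exc.msg}"
    if not isinstance(data, dict):
        return None, "Output must be a JSON object"
    for field, expected in SCHEMA.items():
        if not isinstance(data.get(field), expected):
            return None, f"Field '{field}' must be a {expected.__name__}"
    return data, ""


def complete_with_retry(
    call_model: Callable[[str], str], prompt: str, max_attempts: int = 3
) -> dict:
    """Call the model, validate the output, and retry with a corrective hint."""
    attempt_prompt = prompt
    for _ in range(max_attempts):
        parsed, error = validate(call_model(attempt_prompt))
        if parsed is not None:
            return parsed
        attempt_prompt = (
            f"{prompt}\n\nYour previous answer was rejected: {error}. "
            "Return only valid JSON."
        )
    raise ValueError(f"no valid completion after {max_attempts} attempts")


# Stub model for demonstration: fails once, then returns well-formed JSON.
_responses = iter([
    "Sure! Here is the answer",
    '{"topic": "billing", "intent": "refund", "sentiment": "negative"}',
])
seen_prompts = []


def fake_model(prompt: str) -> str:
    seen_prompts.append(prompt)
    return next(_responses)


result = complete_with_retry(fake_model, "Classify this transcript.")
```

In this sketch a completion that never validates raises an error rather than passing malformed data downstream; capping the number of attempts also keeps retries from consuming the token budget.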

When rapid interpretation is needed, our on-demand summarization capability augments search results with prompt-driven summaries, proving especially valuable for incident response and product issue triage. Finally, continuous prompt evaluation through regression-style benchmarking detects drift in output quality using automated tests against curated transcript sets.
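Regression-style benchmarking can be sketched as scoring each prompt version against a fixed, curated set and flagging drops beyond a tolerance. The golden set, labels, and threshold here are invented for the example, and simple functions stand in for LLM-backed prompts.

```python
from typing import Callable

# Hypothetical curated set: (transcript, expected label).
GOLDEN_SET = [
    ("My renewal link is broken", "website_issue"),
    ("I was charged twice this month", "billing"),
    ("I want to cancel my hosting plan", "cancellation"),
    ("The checkout page keeps timing out", "website_issue"),
]


def accuracy(classify: Callable[[str], str]) -> float:
    """Fraction of golden transcripts whose label matches the expected one."""
    correct = sum(1 for text, expected in GOLDEN_SET if classify(text) == expected)
    return correct / len(GOLDEN_SET)


def has_drifted(baseline: float, candidate: float, max_drop: float = 0.1) -> bool:
    """Flag a quality drop larger than max_drop relative to the baseline."""
    return baseline - candidate > max_drop


# Stand-ins for two prompt versions; a real run would call an LLM.
def prompt_v1(text: str) -> str:
    lowered = text.lower()
    if "charged" in lowered:
        return "billing"
    if "cancel" in lowered:
        return "cancellation"
    return "website_issue"


def prompt_v2(text: str) -> str:
    # Simulated regression: everything is labeled as a website issue.
    return "website_issue"


baseline = accuracy(prompt_v1)
candidate = accuracy(prompt_v2)
print(f"baseline={baseline:.2f} candidate={candidate:.2f} "
      f"drift={has_drifted(baseline, candidate)}")
```

Running the same fixed set against every prompt or model change turns "does the output still look right?" into a repeatable test.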

Example use case: Targeted root cause analysis

The following scenario illustrates how Lighthouse quickly resolved an actual problem at GoDaddy that could otherwise have escalated into a much more significant issue.

Scenario: A spike in customer calls on a particular day, caused by a customer-facing link not working as expected.

Workflow:

- Filter transcripts by geography, date, and product metadata via OpenSearch

- Pass filtered set to a domain-specific prompt in Lighthouse

- Generate structured insights (e.g., sentiment distribution, causal drivers)
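The three workflow steps above can be sketched end to end as follows. Everything here is a stand-in: the `Transcript` fields, the in-memory filter (in place of an OpenSearch query), the keyword-based `extract_insight` (in place of an LLM prompt), and the sample data are assumptions for illustration.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date


@dataclass
class Transcript:
    text: str
    country: str
    day: date
    product: str


def filter_transcripts(items, country, day, product):
    """Step 1: metadata filter (stands in for an OpenSearch query)."""
    return [t for t in items if (t.country, t.day, t.product) == (country, day, product)]


def extract_insight(transcript: Transcript) -> dict:
    """Step 2: stand-in for the domain-specific prompt; a real run would call an LLM."""
    broken_link = "link" in transcript.text.lower()
    return {
        "driver": "broken_link" if broken_link else "other",
        "sentiment": "negative" if broken_link else "neutral",
    }


def summarize(insights):
    """Step 3: aggregate structured insights into distributions."""
    return {
        "drivers": Counter(i["driver"] for i in insights),
        "sentiment": Counter(i["sentiment"] for i in insights),
    }


DAY = date(2024, 1, 15)  # arbitrary example date
data = [
    Transcript("The link in my email does not work", "US", DAY, "domains"),
    Transcript("That link gives me an error page", "US", DAY, "domains"),
    Transcript("How do I add a mailbox?", "US", DAY, "email"),
    Transcript("Please update my address", "US", DAY, "domains"),
]

filtered = filter_transcripts(data, "US", DAY, "domains")
report = summarize([extract_insight(t) for t in filtered])
print(report["drivers"].most_common(1))
```

Filtering first keeps the expensive step small: only the transcripts matching the incident's geography, date, and product reach the prompt.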

Real-world impact: Through Lighthouse insights, GoDaddy was able to quickly identify this trending issue and rectify the problem before it escalated into a larger operational crisis. This early detection and resolution reduced further customer calls, decreased customer dissatisfaction, and prevented what could have been a significant service disruption. This demonstrates how automated analysis can surface critical issues that might otherwise go undetected until significant customer impact occurs.

Conclusion

Lighthouse converts massive, noisy, unstructured interaction datasets into structured, actionable intelligence with minimal manual intervention. By combining deterministic search, scalable data pipelines, and LLM-driven prompt engineering, GoDaddy has built an operational analytics system capable of both broad monitoring and highly targeted investigation.

Within a week, by analyzing the distribution of root causes and customer intents across the high volume of daily calls handled by GoDaddy agents, Lighthouse identified the most common drivers of escalations and the key factors contributing to customer dissatisfaction. These insights provided a data-backed foundation for targeted operational enhancements and strategic initiatives, and they continue to inform key business decisions and improvements across departments.