Key takeaways

- Scaling A/B testing isn’t about running more experiments — it’s about enabling teams to learn faster while maintaining customer trust.

- GoDaddy’s growth in experimentation is powered by culture, collaboration, shared metrics, and platform capabilities like Hivemind.

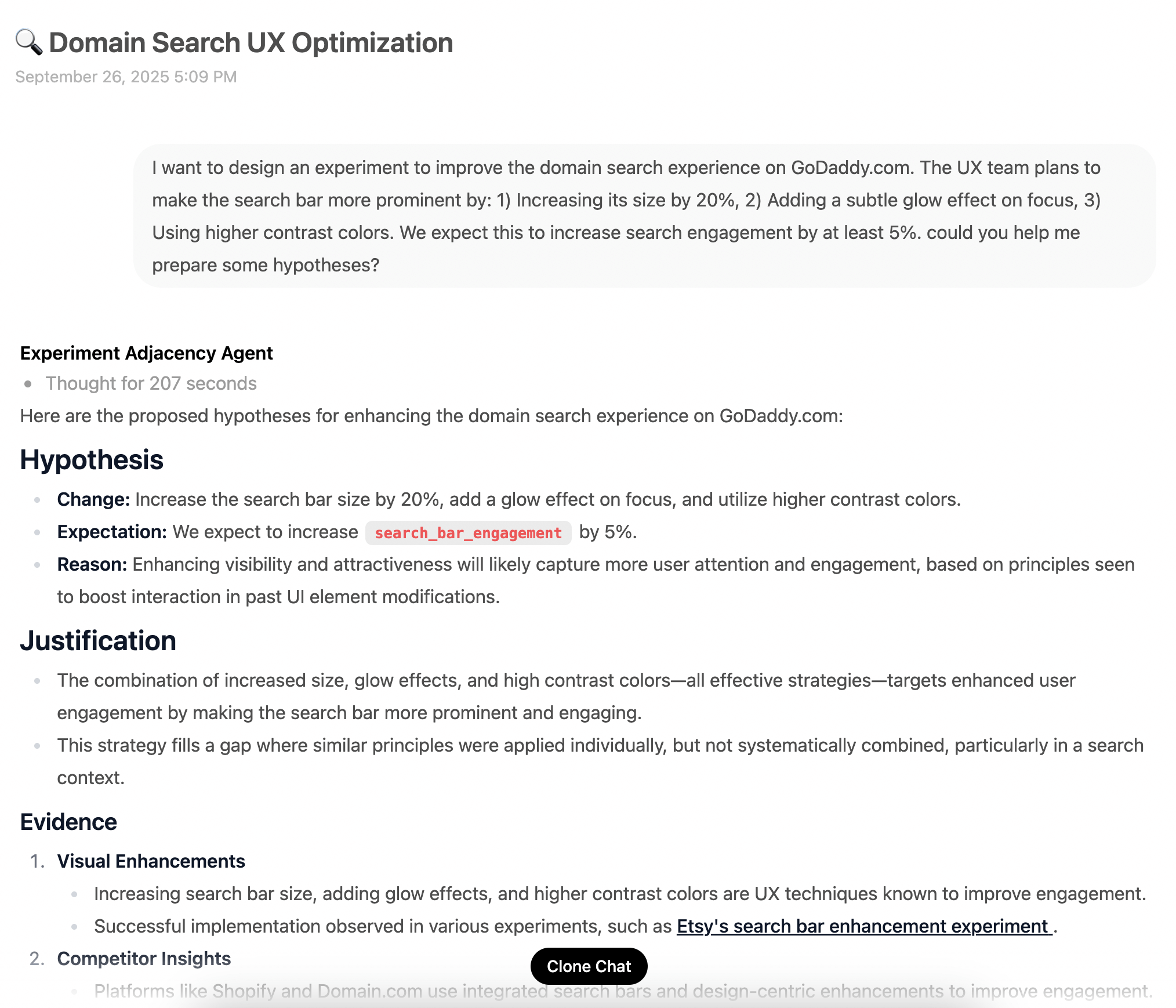

- As GoDaddy scales, AI plays an increasing role in hypothesis generation, experiment setup, analysis, and insight-sharing, strengthening the learning engine.

(Editor's note: This post is the last in a four-part series that discusses experimentation at GoDaddy. You can read part one here, part two here, and part three here.)

Over the past year, we’ve explored how GoDaddy became a learning organization. In this final article of our 2025 series, we look at how GoDaddy scales experimentation and the mindset, systems, and metrics that turn learning into a company-wide capability.

As we outlined earlier, scaling A/B testing isn’t about running more experiments — it’s about creating the conditions for teams to learn faster while protecting customer trust. This approach has already contributed $1.6 billion in revenue growth.

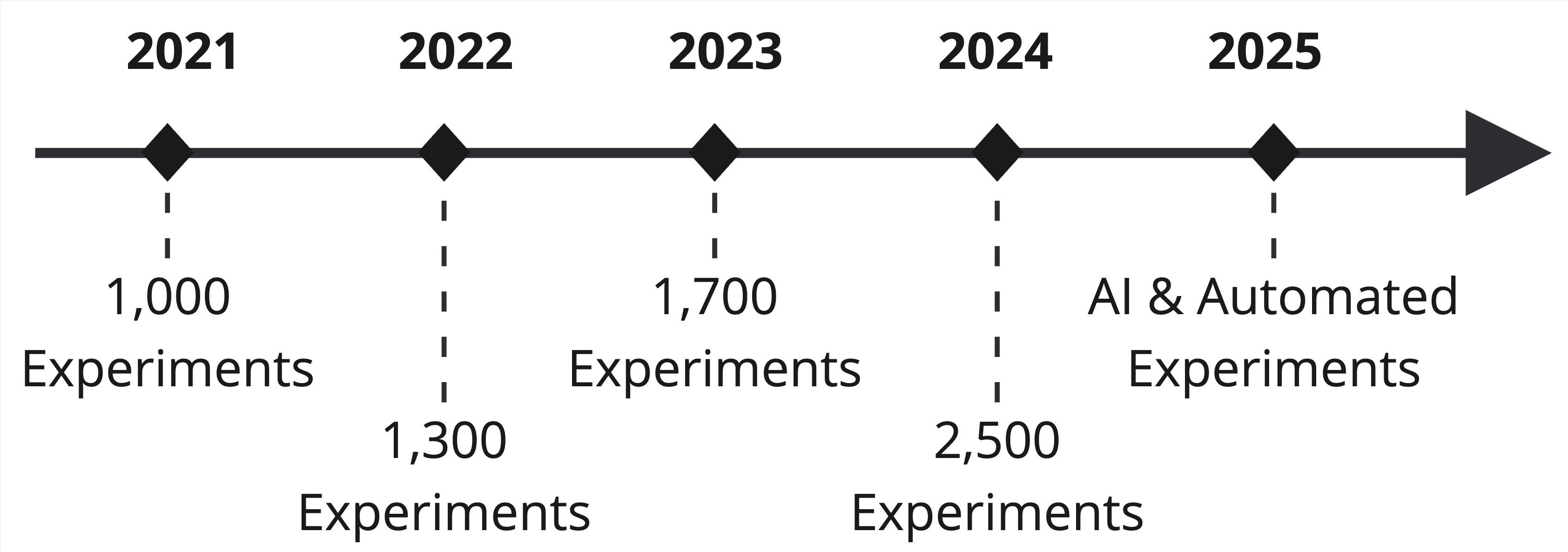

Journey to scale

GoDaddy’s experimentation foundation took shape over several years. Scaling A/B testing has been a gradual effort shaped by systems, habits, and technology.

2021: Foundations

GoDaddy ran about 1,000 experiments, establishing core workflows while migrating scorecards to Hivemind, our internal experimentation platform. Early capabilities included segmentation, new metrics, and the first sample-size calculator — beginning the democratization of experimentation tools and best practices.

2022: Building the platform

Experiment volume rose to 1,300+ as Hivemind became the primary configuration and reporting engine, replacing SplitIO. Teams gained standardized templates, custom and guardrail metrics, and dashboards tracking experiment health and throughput.

2023: Introducing intelligence

Experimentation exceeded 1,700 experiments. Hivemind expanded with automated badging, segmentation insights, AI-powered hypothesis suggestions, experiment summaries, and stronger integration across platforms. Peer reviews and showcase rituals matured, embedding experimentation deeper into workflows.

2024: Experimentation everywhere

GoDaddy surpassed 2,500 experiments. New features included the Continuous Deployment Environment (CDE) for measuring full-release impacts, a unified feature flag store, adjacency model, AI agent, and Company Experiment Metrics. Experimentation expanded to org, team, and portfolio-level monitoring.

2025: Scaling with intelligence

The focus has shifted from scaling volume to scaling insight. Hivemind’s AI surfaces hypotheses, flags experiment overlaps, and connects results across journeys. GoDaddy’s experimentation program has evolved into a self-improving learning engine, accelerating the time from idea to measured impact.

Operating model

Experimentation at GoDaddy is driven by people and the way we work together across three interconnected layers, each with its own focus and feedback loops. Together, these create a system that supports experimentation from company vision to daily execution. The following sections describe the experimentation operating model.

Culture (company level) — learning as a system

Rituals like the Experimentation Showcase and OKR alignment make insights visible and celebrated. Teams are encouraged to pursue bold ideas without fear of being wrong—the focus is on learning, not winning. Leadership reinforces curiosity and transparency through Company Experiment Health Metrics.

Collaboration (program level) — teams in sync

Shared templates, unified metrics like incremental gross cash receipt (iGCR), and Opportunity Solution Trees (OSTs) help teams learn in the same language. Peer reviews and cross-team forums spread best practices and avoid duplication. Programs provide governance and clarity, connecting local experiments to strategic priorities.

Execution (squad level) — experiments in action

Squads form around hypotheses, run rapid tests, and iterate quickly. Standardized lifecycles ensure every experiment meets shared quality and data-integrity standards.

At GoDaddy, we encourage every employee to invent, explore, and solve problems to improve our products for customers. Psychological safety is at the heart of this culture—when learning is the goal, not winning, teams feel free to take bold bets.

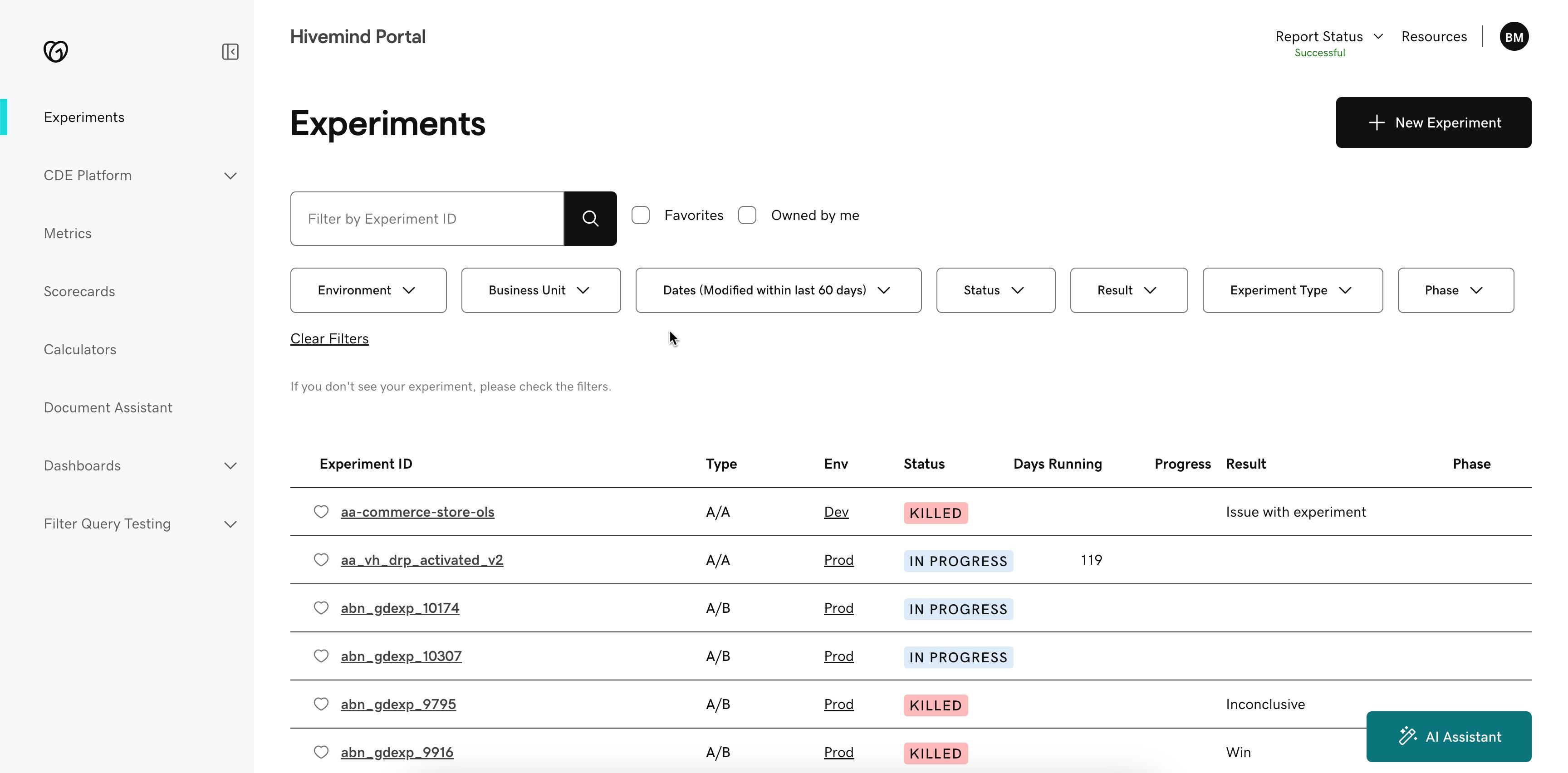

The following image shows GoDaddy’s internal experimentation platform, Hivemind:

What scaling means for GoDaddy

Our rituals guide responsible, high-velocity experimentation at scale. The following five principles balance speed, quality, and trust:

- Velocity with rigor — Learn faster without compromising statistical or ethical standards.

- Parallelization with control — Run multiple experiments safely through traffic governance.

- Unified metrics and visibility — Standardize reporting for clarity at all levels.

- Quality by design — Build best practices into the platform through automation and peer review.

- Portfolio impact — Connect experiments to company-wide outcomes, not just local wins.

Platform enablers

These technical capabilities make scaling experimentation possible.

| Scale Lever | Description | Business Impact | Customer Impact | Engineering Lift |
|---|---|---|---|---|
| CDE and feature flags | Visibility into release impact + ability to evaluate changes without full A/B tests | Faster, safer launches; dynamic risk mitigation | Reliable, low-friction releases | SDK, monitoring, alerting, unified UI |
| Adjacency model and AI agent | Surface experiment ideas and streamline setup | Accelerated ideation; reduced manual effort | More relevant, impactful experiments | Auto-scaling, API integration |
| Corporate experiment metrics | Standard measurement framework | Portfolio-level impact tracking, P&L visibility | Prevents negative surprises | Automated reporting, portfolio management |
| Parallelization and traffic governance | Coordinate experiment traffic | Faster decision-making | Stable customer experience | Allocation services, exclusion lists |
| Unified metrics and guardrails | Consistent measurement standards | Focus on durable value | Prevents regressions | Shared metric layer and alerting |
| Quality scoring and peer reviews | Quality signals and shared review processes | More conclusive tests | Safer, clearer changes | Templates and reviewer workflows |
| Post-rollout causal analysis | Reveal true impact after release | Confident scale-ups and rollbacks | Fewer long-tail issues | Automated impact detection |

These enablers provide the foundation — but scale emerges from how teams put them into practice through systemization.
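
To make the idea of traffic governance more concrete, here is a minimal Python sketch of one common way to coordinate experiment traffic: deterministic hashing into mutually exclusive layers. This is an illustration only, not a description of how Hivemind allocates traffic. The layer names, experiment names, bucket count, and the `bucket` and `assign` functions are all hypothetical.

```python
import hashlib

BUCKETS = 1000  # granularity of traffic allocation (assumed for illustration)

# Hypothetical layers: experiments in the same layer own disjoint bucket ranges,
# so no visitor can be in two of them at once. Layers hash independently.
LAYERS = {
    "checkout": [("exp_a", 0, 500), ("exp_b", 500, 1000)],
    "search": [("exp_c", 0, 200)],
}


def bucket(visitor_id: str, salt: str) -> int:
    """Map a visitor to a stable bucket in [0, BUCKETS) for a given salt."""
    digest = hashlib.sha256(f"{salt}:{visitor_id}".encode("utf-8")).hexdigest()
    return int(digest[:8], 16) % BUCKETS


def assign(visitor_id: str) -> dict:
    """Return {experiment: variant} for every experiment this visitor enters."""
    result = {}
    for layer, slots in LAYERS.items():
        position = bucket(visitor_id, layer)
        for experiment, start, end in slots:
            if start <= position < end:
                # A second, experiment-salted hash splits control vs. treatment.
                in_treatment = bucket(visitor_id, experiment) < BUCKETS // 2
                result[experiment] = "treatment" if in_treatment else "control"
                break
    return result
```

Because the assignment depends only on the visitor ID and the salts, the same visitor always lands in the same experiments and variants, which helps keep the customer experience stable while several tests run in parallel.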

Systemization

Systemization defines the routines, checkpoints, and shared practices that make experimentation reliable at scale. Platform features provide the tooling; systemization shapes the day-to-day behavior.

Lifecycle standards

Every experiment follows a shared lifecycle—from hypothesis pre-registration and power guidance, through guarded launch and sequential monitoring, to post-rollout causal checks. These steps keep insights rigorous while allowing teams to explore freely.
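
As a rough illustration of what power guidance can look like, the sketch below estimates a per-arm sample size for a two-sided two-proportion z-test and converts it into a run time in days. It is a textbook approximation, not Hivemind's calculator. The function names, the default `alpha` and `power`, and the traffic figures are assumptions chosen for the example.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_arm(baseline_rate, min_detectable_lift, alpha=0.1, power=0.8):
    """Approximate visitors needed per arm to detect a relative lift.

    baseline_rate: control conversion rate, e.g. 0.05
    min_detectable_lift: relative lift to detect, e.g. 0.10 for +10%
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + min_detectable_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def duration_days(n_per_arm, arms, daily_eligible_visitors):
    """Days needed to reach the required sample across all arms."""
    return ceil(n_per_arm * arms / daily_eligible_visitors)


# Example: 5% baseline conversion, +10% relative lift, two arms,
# and a hypothetical 10,000 eligible visitors per day.
n = sample_size_per_arm(0.05, 0.10)
days = duration_days(n, arms=2, daily_eligible_visitors=10_000)
```

Tightening `alpha` or targeting a smaller lift increases the required sample, which is why duration estimates belong in the design step rather than after launch.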

Quality scoring

Experiments earn quality badges — Bronze, Silver, Gold, Platinum — based on completeness and design quality. Lightweight peer reviews make quality a collaborative habit.

| Level | Requirements |
|---|---|
| Bronze | Configured in Hivemind and analyzed using a Hivemind scorecard |
| Silver | Bronze + documented hypothesis, decision/guardrail metrics, reasonable limits, alpha ≤ 0.2 |
| Gold | Silver + frequentist design with alpha ≤ 0.1 |
| Platinum | Gold + includes experiment duration calculation |
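
The badge ladder is cumulative, so it can be expressed as a short series of checks. The Python sketch below encodes the requirements from the table above. The `Experiment` fields and the `quality_badge` function are hypothetical names invented for this example, and the real badging logic in Hivemind may differ.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Experiment:
    # Hypothetical fields mirroring the badge requirements.
    configured_in_hivemind: bool
    analyzed_with_scorecard: bool
    has_hypothesis: bool
    has_decision_and_guardrail_metrics: bool
    has_reasonable_limits: bool
    alpha: Optional[float]
    is_frequentist: bool
    has_duration_calculation: bool


def quality_badge(e: Experiment) -> Optional[str]:
    """Return the highest badge earned, or None if Bronze is not met."""
    if not (e.configured_in_hivemind and e.analyzed_with_scorecard):
        return None
    silver = (
        e.has_hypothesis
        and e.has_decision_and_guardrail_metrics
        and e.has_reasonable_limits
        and e.alpha is not None
        and e.alpha <= 0.2
    )
    if not silver:
        return "Bronze"
    if not (e.is_frequentist and e.alpha <= 0.1):
        return "Silver"
    # Platinum adds a documented duration calculation on top of Gold.
    return "Platinum" if e.has_duration_calculation else "Gold"
```

Each level returns early when its requirements are not met, so an experiment can never skip a rung of the ladder.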

Knowledge flow

Shared summaries, dashboards, and automated readouts help insights travel quickly, forming a company-wide feedback loop.

AI-enabled coaching

Hivemind’s AI agent guides experiment authors in real time, improving design and analysis within their workflow.

Celebrating curiosity

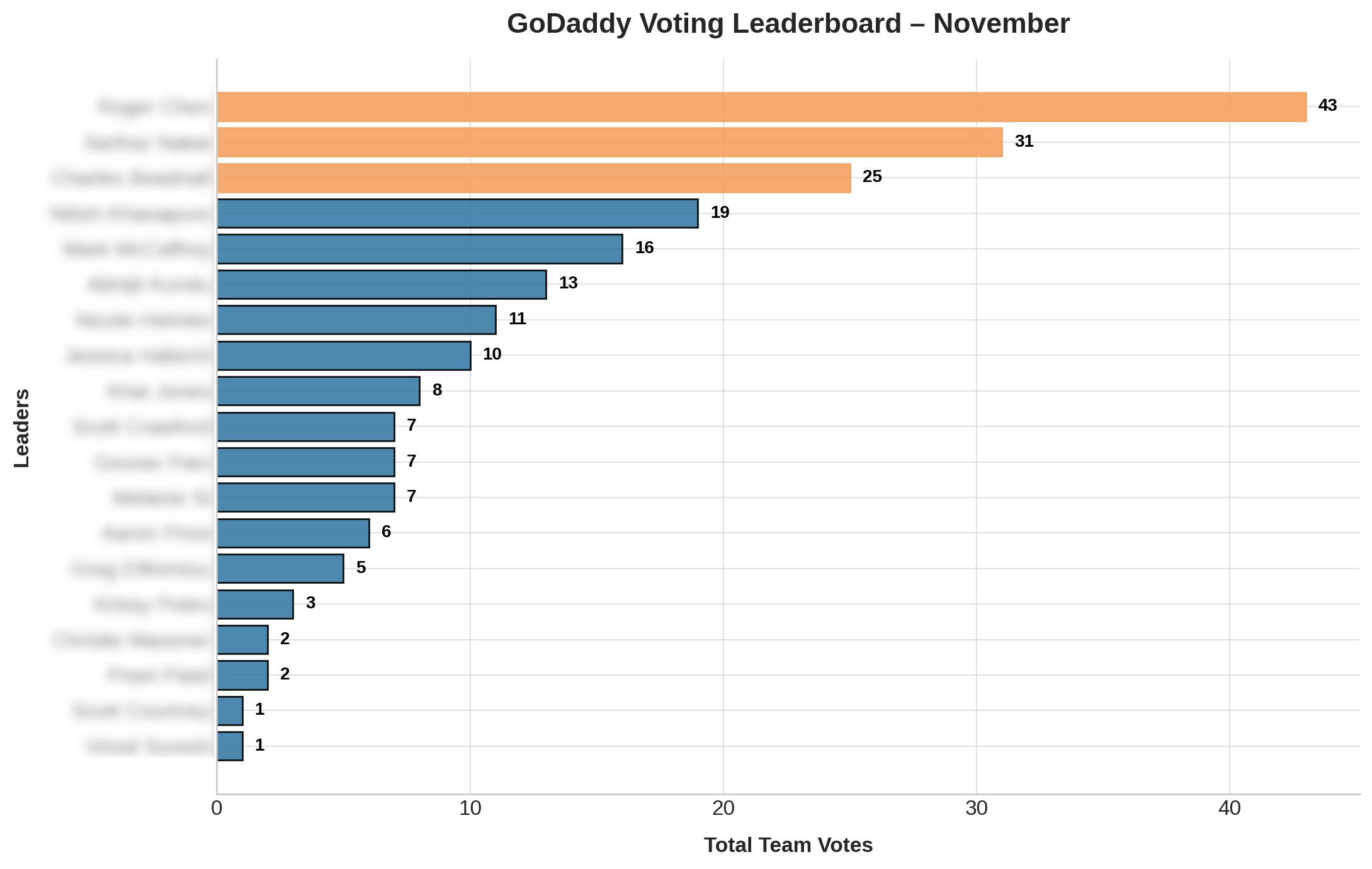

Showcases, storytelling, and voting leaderboards make learning visible and reinforce insight-over-outcome culture.

Tracking metrics

Company Experiment Health Metrics monitor velocity and quality at every level. After GoDaddy invested in quality and platform capabilities, inconclusive results fell and win rates rose—from under 5% to over 30% in two years.

Internal monitoring

Internal monitoring shows whether scaling is working by turning our metrics into a clear view of how experimentation performs across all levels of the organization.

The following table summarizes how we monitor experimentation health at the company, program, and squad levels.

| Level | What we measure |
|---|---|
| Company level | Total experiments run; time-to-decision (median); controlled vs. non-controlled ratio and conclusive outcomes; iGCR impact and guardrail compliance; % of positive, negative, and neutral learnings; insight reuse across business units |
| Program level | Exploration vs. optimization balance; coverage across journeys and surfaces; quality ladder distribution; peer review participation; collaboration through shared templates and initiatives |
| Squad level | % of experiments using Hivemind features; use of AI-enabled setup and causal analysis tools; squad participation in showcases; growth of self-service experimentation |
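
A few of the company-level measures, such as total experiments, median time-to-decision, and the share of positive, negative, and neutral learnings, are simple aggregations over experiment records. The sketch below shows one way to compute them in Python. The record shape (`outcome`, `days_to_decision`) and the `health_summary` function are assumptions made for illustration, and the sample records are invented, not GoDaddy data.

```python
from collections import Counter
from statistics import median

OUTCOMES = ("positive", "negative", "neutral")


def health_summary(experiments):
    """Summarize volume, speed, and outcome mix for a list of experiment records."""
    if not experiments:
        raise ValueError("at least one experiment is required")
    counts = Counter(e["outcome"] for e in experiments)
    total = len(experiments)
    return {
        "total_experiments": total,
        "median_days_to_decision": median(e["days_to_decision"] for e in experiments),
        "outcome_share": {name: counts[name] / total for name in OUTCOMES},
    }


# Invented records, for illustration only.
records = [
    {"outcome": "positive", "days_to_decision": 10},
    {"outcome": "positive", "days_to_decision": 14},
    {"outcome": "negative", "days_to_decision": 7},
    {"outcome": "neutral", "days_to_decision": 21},
]
summary = health_summary(records)
```

The same function can be run on records filtered by program or squad to produce the lower-level views.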

Challenges

Scaling A/B testing across a large organization is never frictionless. Key challenges shaped our process—and ultimately strengthened it.

Consistency across teams

Shared templates, peer reviews, and AI-guided setup helped standardize early variations in experiment design.

One challenge with A/B testing at GoDaddy is that it’s rarely as simple as flipping a switch. To run a clean test, teams need clear goals, good data, and coordination across a lot of moving parts. It can slow things down, but it’s also pushed us to work more closely together and build better habits around experimentation. The payoff is that when we do get a result, we can trust it and act on it with confidence. — Araz Javadov, Group Product Manager

Making metrics meaningful

Unified measurement turned fragmented visibility into alignment.

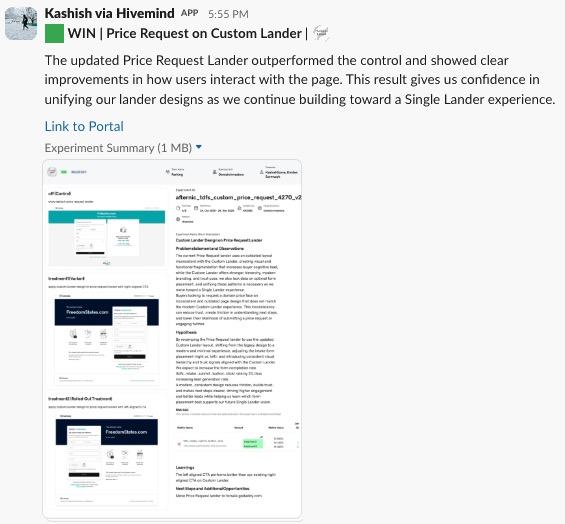

One of the biggest sources of friction our PMs faced was the overhead of sharing pre- and post-experiment results. Even though hypotheses and data already lived in Hivemind, PMs still had to recreate them in decks, present live, and then post screenshots and links across multiple channels. Based on PM feedback, Hivemind was updated to include a high-level experiment summary with the hypothesis, before-and-after visuals, and results in a single view, along with direct Slack sharing. This reduced duplication and allowed PMs to spend less time packaging outcomes and more time improving the customer experience. — Heather Stone, Director Product Management

Balancing speed and rigor

Lightweight reviews, quality scoring, and platform nudges enable teams to move fast without cutting corners.

At our scale, speed only matters if you can trust what you learn. The systems we built, templates, guardrails, and lightweight reviews, let teams move fast without cutting corners. That is how experimentation becomes part of the day to day, not an all-hands sprint. — Alan Shiflett, GM, Domain Aftermarket and Specialty Brands

Conclusion

This year made something clear: scaling experimentation is less about the number of tests and more about the environment that supports learning. At GoDaddy, that environment strengthened as teams aligned around shared principles, trusted their systems, and treated every result as information that moves the organization forward.

If you’re building your own experimentation practice, start by creating clarity around how experiments are run and how learning is shared. Give teams the safety to explore and the tools to understand the impact of their work. When those pieces come together, experimentation stops being an activity—it becomes a way of working.

As we look ahead, GoDaddy is exploring a new framework that brings human and AI-driven experimentation closer together—shortening the time between an idea and understanding its real impact. Stay tuned for more learnings next year!