Key takeaways

- During peak bidding, polling traffic from legitimate auction users triggered our CDN's DDoS protections, blocking real users at the worst possible moment.

- Server-Sent Events enabled real-time auction updates through persistent connections instead of high-frequency polling, reducing API calls by over 90%.

- The SSE implementation improved bid conversion rates and user engagement while simultaneously reducing server load and infrastructure costs.

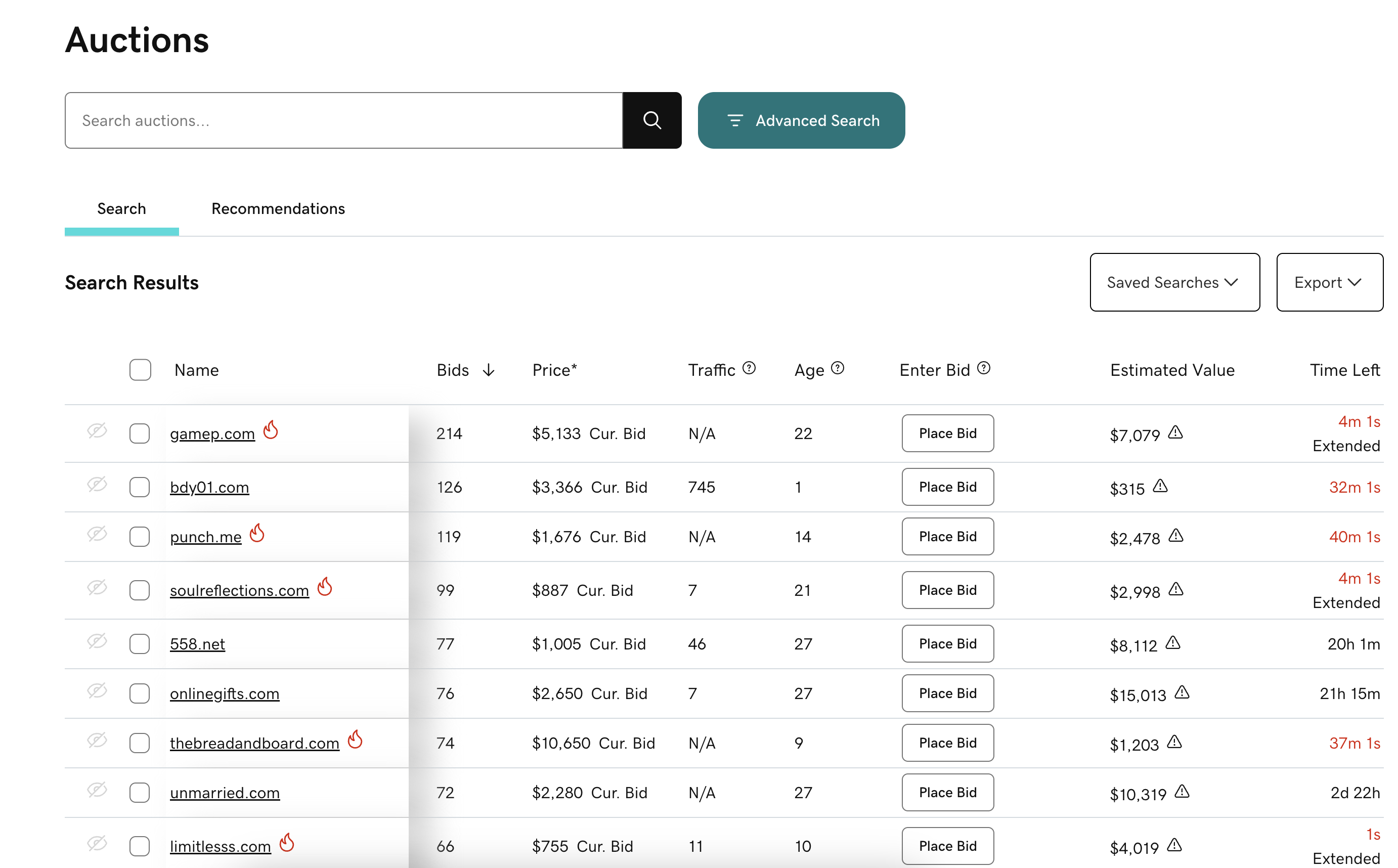

Every second counts in domain name auctions. The domain name aftermarket is a marketplace for expired domain names whose registration has lapsed at GoDaddy and our partner registrars. Users compete by placing bids on these domains to buy them.

As auctions got close to ending, our users naturally became more engaged: refreshing pages, monitoring bids, and staying alert for last-minute opportunities. In domain auctions, the final minutes are often the most critical, as bidders wait until the last moment to place their highest bids, hoping to win valuable domain names at the lowest possible price. This legitimate user behavior created an unexpected technical challenge.

We designed our auction platform frontend to provide timely updates by polling the backend API as auctions approached their end. As auctions neared completion, the frontend would make increasingly frequent requests to fetch updated data including current bid counts, latest bid amounts, auction time extensions, and listing status changes.

The closer an auction got to ending, the more frequent these requests became. With thousands of users watching many auctions simultaneously, this created request spikes that our CDN provider interpreted as DDoS incidents. Legitimate users got blocked with frustrating 403 Forbidden pages at the moments when they needed access most: during the final minutes of auctions they were actively bidding on.

The following image shows our auction listings page where users watch many domain auctions simultaneously:

Moving to server-sent events

To solve this challenge, we implemented Server-Sent Events (SSE), a web standard that enables servers to push live updates to clients over a single, persistent HTTP connection. Instead of clients repeatedly asking "has anything changed?", the server now tells clients "here's what just changed" as updates become available.

SSE provided a robust, browser-native solution for one-way communication without the complexity of a WebSocket implementation. For our use case, where we only needed to push updates from server to client, SSE was a natural fit.

How SSE works

SSE operates with these key characteristics:

- Single Connection: The client establishes one long-lived HTTP connection to the server

- Server Push: The server sends updates to the client as events occur

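- Automatic Reconnection: Browsers reconnect automatically when a connection drops; the reconnection delay (which the server can set with the `retry` field) should be randomized so that many clients don't reconnect at once and cause a reconnection DDoS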

- Lightweight Protocol: Uses standard HTTP with minimal overhead
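To make the protocol concrete, here is a minimal Go sketch of the wire format a server writes. Each event is plain text: optional `id:`, `event:`, and `retry:` fields, one `data:` line per line of payload, and a blank line that ends the event. The helpers `formatEvent` and `jitteredRetry` are hypothetical, and the 3000 ms base with up to 2000 ms of jitter is an assumed example, not a production setting.

```go
package main

import (
	"fmt"
	"math/rand"
	"strings"
)

// formatEvent renders one SSE message (hypothetical helper).
// Multi-line payloads are split into several "data:" lines;
// the trailing blank line terminates the event.
func formatEvent(id, event, data string, retryMs int) string {
	var b strings.Builder
	if id != "" {
		fmt.Fprintf(&b, "id: %s\n", id)
	}
	if event != "" {
		fmt.Fprintf(&b, "event: %s\n", event)
	}
	if retryMs > 0 {
		// Tells the browser how long to wait before reconnecting
		fmt.Fprintf(&b, "retry: %d\n", retryMs)
	}
	for _, line := range strings.Split(data, "\n") {
		fmt.Fprintf(&b, "data: %s\n", line)
	}
	b.WriteString("\n")
	return b.String()
}

// jitteredRetry returns baseMs plus a random extra delay in [0, spreadMs),
// so clients dropped at the same moment reconnect at different times.
// Base and spread values are assumptions for illustration.
func jitteredRetry(baseMs, spreadMs int) int {
	if spreadMs <= 0 {
		return baseMs
	}
	return baseMs + rand.Intn(spreadMs)
}

func main() {
	retry := jitteredRetry(3000, 2000)
	fmt.Print(formatEvent("42", "bid", `{"listingId":"123","bidCount":7}`, retry))
}
```

A named event such as `bid` is delivered to `addEventListener('bid', ...)` on the client rather than to `onmessage`, which only receives events sent without an `event:` field.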

The following example shows how a client establishes an SSE connection and handles incoming events:

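```javascript
// Client-side SSE implementation
// subscriptionId is returned when the subscription is created
const eventSource = new EventSource(`/api/events/subscriptions/${subscriptionId}`);

eventSource.onmessage = function (event) {
  // Each message's data field carries a JSON payload of changed listings
  const auctionData = JSON.parse(event.data);
  updateUI(auctionData.listings);
};
```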

The client sends the appropriate headers when establishing the SSE connection:

```http
Accept: text/event-stream
Cache-Control: no-cache
```

Our events API architecture

Our SSE implementation centers around a dedicated microservice that manages live auction data streams. When a user visits auction pages, the frontend creates a subscription by sending a POST request with the auction listing IDs they want to monitor. The system generates a unique subscription ID and stores it in DynamoDB with automatic TTL for cleanup. The client then connects to the SSE stream using a GET request, establishing the persistent connection for receiving updates.

From there, the service handles data streaming intelligently. Instead of making individual API calls for each auction listing, the system groups multiple listings together into fewer API calls, and uses Redis caching to track what data has changed since the last update. The change detection algorithm ensures we only stream data that has actually changed, minimizing unnecessary data transmission.
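The change detection step can be sketched as comparing a fingerprint of each listing's current state with the fingerprint recorded on the previous poll, and streaming only the listings whose fingerprint differs. This sketch keeps one detector per subscription so every client receives each change once, and uses a SHA-256 hash and an in-memory map in place of Redis. `listingState`, `changeDetector`, and the field set are assumptions, not the production schema.

```go
package main

import (
	"crypto/sha256"
	"encoding/json"
	"fmt"
)

// listingState is a hypothetical snapshot of the fields clients display.
type listingState struct {
	ListingID  string  `json:"listingId"`
	BidCount   int     `json:"bidCount"`
	CurrentBid float64 `json:"currentBid"`
	EndsAt     string  `json:"endsAt"`
	Status     string  `json:"status"`
}

// changeDetector remembers a fingerprint per listing for one subscription.
// A real deployment would keep this state in a shared cache such as Redis.
type changeDetector struct {
	lastSeen map[string][32]byte
}

func newChangeDetector() *changeDetector {
	return &changeDetector{lastSeen: make(map[string][32]byte)}
}

// changed returns only listings whose state differs from the previous call,
// and records the new fingerprints.
func (d *changeDetector) changed(current []listingState) ([]listingState, error) {
	var out []listingState
	for _, l := range current {
		b, err := json.Marshal(l)
		if err != nil {
			return nil, err
		}
		sum := sha256.Sum256(b)
		if prev, ok := d.lastSeen[l.ListingID]; ok && prev == sum {
			continue // unchanged since last update; don't resend
		}
		d.lastSeen[l.ListingID] = sum
		out = append(out, l)
	}
	return out, nil
}

func main() {
	d := newChangeDetector()
	first, _ := d.changed([]listingState{{ListingID: "1", BidCount: 3}, {ListingID: "2", BidCount: 5}})
	second, _ := d.changed([]listingState{{ListingID: "1", BidCount: 4}, {ListingID: "2", BidCount: 5}})
	fmt.Println(len(first), len(second)) // 2 1
}
```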

The system was designed to handle scale from the start. It can manage many users simultaneously, each with their own subscription, while safely handling multiple data updates at the same time. Pub/sub messaging handles TTL updates and subscription management, while automatic timeouts clean up expired subscriptions to prevent resource leaks.

Resource management was critical to prevent system overload. We implemented configurable subscription limits per user, with automatic cleanup of the oldest subscriptions when users exceed limits. TTL-based expiration with configurable refresh ensures connections don't linger indefinitely, and client disconnections trigger cleanup of their associated resources.
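The per-user limit with oldest-first eviction can be sketched as a small registry that keeps each user's subscription IDs in creation order. `userSubscriptions`, the `onEvict` callback, and the limit value are hypothetical; `onEvict` is where a real service would close the stream and delete the stored record, and it runs outside the lock so slow cleanup doesn't block other users.

```go
package main

import (
	"fmt"
	"sync"
)

// userSubscriptions enforces a per-user cap (limit >= 1), evicting the
// oldest subscription when a new one would exceed it.
type userSubscriptions struct {
	mu      sync.Mutex
	limit   int
	byUser  map[string][]string // subscription IDs per user, oldest first
	onEvict func(subscriptionID string)
}

func newUserSubscriptions(limit int, onEvict func(string)) *userSubscriptions {
	return &userSubscriptions{limit: limit, byUser: make(map[string][]string), onEvict: onEvict}
}

// add records subID for userID and returns any IDs evicted to stay within the limit.
func (u *userSubscriptions) add(userID, subID string) []string {
	u.mu.Lock()
	ids := append(u.byUser[userID], subID)
	var evicted []string
	for len(ids) > u.limit {
		evicted = append(evicted, ids[0])
		ids = ids[1:]
	}
	u.byUser[userID] = ids
	u.mu.Unlock()

	// Release resources for evicted subscriptions outside the lock
	for _, id := range evicted {
		if u.onEvict != nil {
			u.onEvict(id)
		}
	}
	return evicted
}

func main() {
	subs := newUserSubscriptions(2, func(id string) { fmt.Println("evicted", id) })
	subs.add("user-1", "sub-a")
	subs.add("user-1", "sub-b")
	subs.add("user-1", "sub-c") // prints "evicted sub-a"
}
```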

Implementation details

The following sections describe how we implemented our SSE solution.

Backend framework

Go's `net/http` server runs each request in its own goroutine, so concurrent SSE connections need no extra plumbing. Within each handler, we use channels and `select` statements for clean timeout and cancellation handling.

Server-side SSE implementation

This simplified version shows the complete SSE flow:

```go
import (
	"encoding/json"
	"fmt"
	"net/http"
	"time"
)

// SSEHandler streams auction updates to a single client.
// getSubscriptionData and getUpdates are application-specific helpers (not shown).
func SSEHandler(w http.ResponseWriter, r *http.Request) {
	// Set required SSE headers
	w.Header().Set("Content-Type", "text/event-stream")
	w.Header().Set("Cache-Control", "no-cache")
	w.Header().Set("Connection", "keep-alive")

	// Verify streaming support
	flusher, ok := w.(http.Flusher)
	if !ok {
		http.Error(w, "streaming unsupported", http.StatusInternalServerError)
		return
	}

	// Get subscription details
	subscriptionData := getSubscriptionData(r)

	// Check for updates every 500ms; one ticker avoids allocating a timer per iteration
	ticker := time.NewTicker(500 * time.Millisecond)
	defer ticker.Stop()

	for {
		select {
		case <-r.Context().Done():
			// Client disconnected, clean up and exit
			return
		case <-ticker.C:
			updates := getUpdates(subscriptionData)
			if len(updates) == 0 {
				continue
			}
			// Encode and send as an SSE event
			jsonBytes, err := json.Marshal(updates)
			if err != nil {
				continue // skip this batch rather than drop the connection
			}
			if _, err := fmt.Fprintf(w, "data: %s\n\n", jsonBytes); err != nil {
				return // Client disconnected
			}
			// Flush to send data immediately
			flusher.Flush()
		}
	}
}
```

This implementation demonstrates the complete SSE pattern:

- Sets required headers for SSE streaming

- Verifies the response writer supports immediate flushing

- Continuously checks for auction updates in a simple loop

- Formats data as proper SSE events (`data: ...\n\n`)

- Flushes immediately to push updates to the client in real time

Connection management at scale

Managing thousands of concurrent SSE connections required careful attention to key areas:

Memory Management: Go's HTTP server automatically handles SSE connection concurrency. Within each connection, we use goroutines with sync.WaitGroup for parallel processing of auction data batches, and Go's garbage collector handles cleanup when connections close.

Connection Lifecycle: The handler detects client disconnections through context cancellation (r.Context().Done()) and automatically cleans up resources. TTL-based timeouts ensure connections don't persist indefinitely.

Resource Limits: We implemented configurable subscription limits per user, with automatic cleanup of the oldest subscriptions when users exceed limits, preventing resource exhaustion.

The impact

Immediately after rolling out SSE, we saw API request volume drop by more than 90%. More importantly, we eliminated the 403 responses that had been blocking users on the Auctions search results page (SERP).

But the real validation came from the business metrics. We measured a +0.84% bid lift across all front-end auction surfaces and a +4.85% improvement in expired domain conversions. When users could reliably access auction data without getting kicked off, they stayed more engaged and completed more transactions.

The change also dramatically improved the user experience. Users could now browse auction listings without unexpected interruptions, receiving seamless updates as auction data changed. The persistent connections meant reliable access during those critical final minutes of auctions, and the reduced bandwidth usage made the experience faster and more responsive across all devices.

Our infrastructure benefited too. The dramatic reduction in API calls substantially lowered server load, and the shift from thousands of individual requests to persistent connections improved our ability to handle traffic spikes.

Conclusion

By replacing high-frequency polling with Server-Sent Events, we transformed a frustrating technical issue into a smooth user experience. Our users now receive timely auction updates without getting blocked by DDoS detection systems.

The implementation proved that sometimes the best solution isn't the most complex one. SSE provided exactly what we needed: reliable, efficient data streaming with minimal complexity and broad browser compatibility.